What is inference in machine learning? It is the stage where a trained model is put to work, turning the patterns it learned during training into predictions about data it has never seen. In this article we explore what inference is, the main techniques behind it, where it is applied, and the challenges it presents.

From deciphering natural language to guiding medical diagnoses, inference empowers machines to make informed decisions, enabling a future where AI’s wisdom seamlessly intertwines with our own.

Introduction

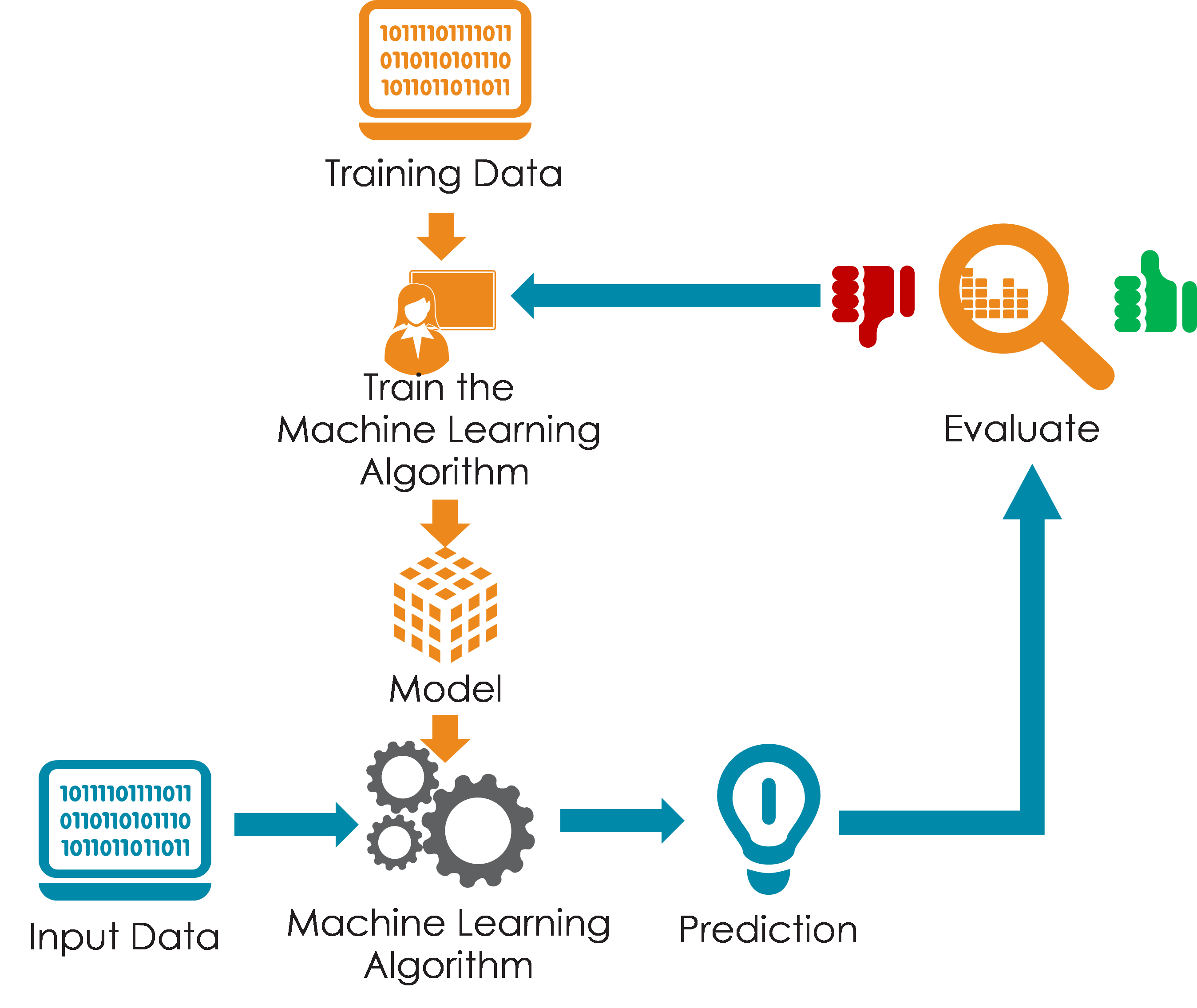

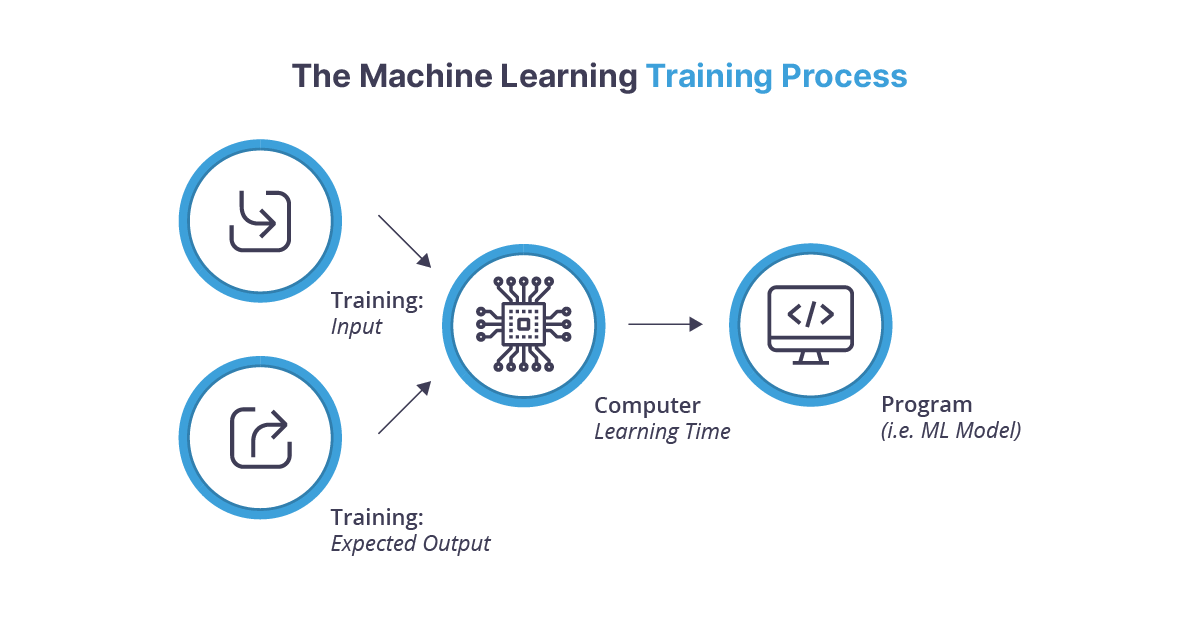

Inference in machine learning refers to the process of making predictions or estimations based on a trained model. It involves using the model to analyze new, unseen data and draw conclusions or make predictions about that data.

Inference plays a crucial role in predictive modeling, as it allows us to apply the knowledge learned from the training data to new situations. The model, after being trained on a dataset, gains the ability to recognize patterns and make predictions.

Inference is the mechanism through which we utilize this trained model to make predictions or estimations on new data.
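The split between training and inference is easier to see in code. Below is a minimal, library-free Python sketch that fits a straight line by ordinary least squares (training) and then applies the learned parameters to an input it never saw (inference). The data points and the function names `fit_line` and `predict` are hypothetical, chosen only for illustration.

```python
def fit_line(xs, ys):
    """Training: estimate slope and intercept by ordinary least squares."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x

def predict(params, x):
    """Inference: apply the learned parameters to a new input; nothing is re-learned."""
    slope, intercept = params
    return slope * x + intercept

# Hypothetical training data (x, y pairs).
params = fit_line([1.0, 2.0, 3.0, 4.0], [2.1, 3.9, 6.2, 7.8])
print(round(predict(params, 5.0), 2))  # 9.85
```

Training runs once and produces `params`; inference can then be repeated cheaply for every new input.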

Types of Inference

There are three main types of inference in machine learning:

- Inductive Inference: This type of inference involves making generalizations based on observed data. It assumes that patterns observed in the training data will also hold true for unseen data. Inductive inference is commonly used in supervised learning, where the model is trained on labeled data and then used to predict labels for new data.

- Deductive Inference: This type of inference involves making conclusions that are logically guaranteed to be true based on given premises. It is often used in rule-based systems or logical reasoning tasks. In deductive inference, the conclusion is a direct consequence of the premises, and its truth is guaranteed if the premises are true.

- Abductive Inference: This type of inference involves making the best possible guess or explanation based on available evidence. Unlike a deductive conclusion, an abductive one is plausible rather than guaranteed. It is sometimes associated with unsupervised learning, where the model is trained on unlabeled data and then used to identify patterns or make predictions.

Abductive inference is also known as “inference to the best explanation” and is used to find the most likely explanation for a given set of observations.
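Deductive and abductive inference are easiest to contrast side by side. Here is a minimal Python sketch on a hypothetical toy domain: forward chaining over if-then rules stands in for deduction, and choosing the hypothesis with the highest prior × likelihood score stands in for abduction. The rules, priors, and likelihoods are made-up values for illustration only.

```python
# Hypothetical knowledge base: each rule maps a set of premises to a conclusion.
RULES = [
    ({"rain"}, "wet_grass"),
    ({"sprinkler_on"}, "wet_grass"),
    ({"wet_grass", "cold"}, "icy_grass"),
]

def forward_chain(facts, rules):
    """Deductive inference: apply rules until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

# Hypothetical priors P(H) and likelihoods P(wet_grass | H) for candidate explanations.
PRIORS = {"rain": 0.3, "sprinkler_on": 0.1}
LIKELIHOOD_WET = {"rain": 0.9, "sprinkler_on": 0.95}

def best_explanation(priors, likelihoods):
    """Abductive inference: pick the hypothesis with the highest prior * likelihood."""
    return max(priors, key=lambda h: priors[h] * likelihoods[h])

print(sorted(forward_chain({"rain", "cold"}, RULES)))  # ['cold', 'icy_grass', 'rain', 'wet_grass']
print(best_explanation(PRIORS, LIKELIHOOD_WET))        # rain
```

The facts returned by `forward_chain` are guaranteed whenever the premises hold, whereas `rain` is only the most plausible explanation for wet grass under the assumed numbers; the sprinkler could still be the true cause.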

Methods of Inference

Inference is the process of making predictions or drawing conclusions from data. In machine learning, there are two main approaches to inference: the Bayesian approach and the frequentist approach.

Bayesian Approach

The Bayesian approach to inference is based on Bayes’ theorem, which provides a framework for updating beliefs in light of new evidence. In the Bayesian approach, we start with a prior distribution, which represents our beliefs about the parameters of the model before we see any data.

We then update this prior distribution using the data we observe to obtain a posterior distribution, which represents our beliefs about the parameters after seeing the data.
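The prior-to-posterior update can be made concrete with a coin whose probability of heads is unknown. This minimal Python sketch assumes a Beta prior and Bernoulli observations (a conjugate pair chosen here because the posterior has a closed form); the prior parameters and observed counts are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class BetaBelief:
    alpha: float  # prior pseudo-count of heads
    beta: float   # prior pseudo-count of tails

    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)

def update(prior: BetaBelief, heads: int, tails: int) -> BetaBelief:
    """Bayes' theorem for a Beta prior and Bernoulli data: the posterior is again
    a Beta distribution whose parameters add the observed counts to the prior's."""
    return BetaBelief(prior.alpha + heads, prior.beta + tails)

prior = BetaBelief(alpha=2.0, beta=2.0)      # mild belief that the coin is fair
posterior = update(prior, heads=7, tails=3)  # hypothetical observations
print(prior.mean())      # 0.5
print(posterior.mean())  # 9 / 14, about 0.643
```

The posterior mean sits between the prior mean (0.5) and the observed frequency (0.7), showing how the prior tempers the evidence.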

Frequentist Approach

The frequentist approach to inference is based on the idea of sampling distributions. In the frequentist approach, we assume that the data we observe is a random sample from a larger population. We then use the data to estimate the parameters of the population.

The frequentist approach does not explicitly consider prior beliefs, and it treats the parameters of the model as fixed but unknown quantities rather than as random variables.
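For comparison, here is a frequentist treatment of the same hypothetical coin data (7 heads in 10 flips): a maximum-likelihood point estimate plus a 95% Wald confidence interval. The Wald interval is a simple normal approximation, used here only because it is short; it is known to be unreliable for small samples or proportions near 0 or 1.

```python
import math
from statistics import NormalDist

def proportion_estimate(successes: int, trials: int, confidence: float = 0.95):
    """Frequentist point estimate (MLE) and Wald confidence interval for a proportion."""
    p_hat = successes / trials                      # maximum-likelihood estimate
    se = math.sqrt(p_hat * (1 - p_hat) / trials)    # estimated standard error
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return p_hat, (p_hat - z * se, p_hat + z * se)

p_hat, (lo, hi) = proportion_estimate(successes=7, trials=10)
print(p_hat)                      # 0.7
print(round(lo, 3), round(hi, 3))  # 0.416 0.984
```

Because the true proportion is treated as fixed, the 95% refers to the procedure: intervals built this way would cover the true value in about 95% of repeated samples.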

Comparison of the Bayesian and Frequentist Approaches

The Bayesian and frequentist approaches to inference have different strengths and weaknesses. The Bayesian approach is more flexible and can incorporate prior beliefs, but it can be more computationally expensive. The frequentist approach is less flexible, but it is often more computationally efficient.

Applications of Inference

Inference in machine learning finds widespread applications across various domains, enabling us to make informed decisions and predictions based on learned models.

Let’s explore some notable examples:

Natural Language Processing

- Text Classification: Inferring the category or topic of a given text, such as spam detection, sentiment analysis, or topic modeling.

- Machine Translation: Translating text from one language to another, using statistical models to infer the most likely translation.

- Question Answering: Extracting relevant answers from large text corpora by inferring the most likely response to a given question.
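As an example of inference in text classification, here is a minimal multinomial Naive Bayes spam filter written in plain Python. Naive Bayes is one classic technique chosen for illustration, not the only way to do this; the four training sentences are hypothetical, and real systems train on far larger corpora.

```python
import math
from collections import Counter

def train_naive_bayes(docs):
    """Training: count documents per class and words per class from (text, label) pairs."""
    class_counts = Counter(label for _, label in docs)
    word_counts = {label: Counter() for label in class_counts}
    for text, label in docs:
        word_counts[label].update(text.lower().split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return class_counts, word_counts, vocab

def predict(model, text):
    """Inference: return the label with the highest log-posterior for unseen text."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label, n_docs in class_counts.items():
        total_words = sum(word_counts[label].values())
        score = math.log(n_docs / total_docs)  # log prior
        for word in text.lower().split():
            # Laplace (add-one) smoothing keeps unseen words from zeroing the score.
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

training_data = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to monday", "ham"),
    ("see you at lunch tomorrow", "ham"),
]
model = train_naive_bayes(training_data)
print(predict(model, "free prize money"))        # spam
print(predict(model, "lunch meeting tomorrow"))  # ham
```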

Computer Vision

- Object Detection: Identifying and localizing objects within an image or video, inferring their presence and location.

- Image Segmentation: Dividing an image into distinct regions or segments, inferring the boundaries and characteristics of different objects.

- Facial Recognition: Verifying or identifying individuals based on their facial features, using inference to match a given face to a known identity.
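The matching step of facial recognition is often framed as comparing a face embedding against a gallery of known embeddings. The sketch below assumes the embeddings have already been produced by some face model (not shown) and uses cosine similarity with a hypothetical acceptance threshold of 0.8; the four-dimensional vectors are made up, and real embeddings usually have far more dimensions.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def identify(query, gallery, threshold=0.8):
    """Return the best-matching identity, or None if no known face is similar enough."""
    best_name, best_score = None, -1.0
    for name, embedding in gallery.items():
        score = cosine_similarity(query, embedding)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None

# Hypothetical embeddings for two enrolled people.
gallery = {
    "alice": [0.9, 0.1, 0.3, 0.2],
    "bob": [0.1, 0.8, 0.2, 0.5],
}
print(identify([0.85, 0.15, 0.25, 0.2], gallery))  # alice
print(identify([0.0, 0.0, 1.0, 0.0], gallery))     # None (no close match)
```

The threshold trades false accepts against false rejects; its value would have to be tuned on real data.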

Healthcare

- Disease Diagnosis: Inferring the likelihood of a specific disease based on a patient’s symptoms, medical history, and test results.

- Treatment Planning: Determining the optimal treatment options for a patient, considering their condition, response to previous treatments, and potential side effects.

- Drug Discovery: Identifying potential new drug candidates by inferring the properties and interactions of molecules based on existing data.
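Diagnostic inference often comes down to Bayes' theorem: combining how common a disease is with how reliable a test is. The minimal Python sketch below computes the probability of disease given a positive test; the prevalence, sensitivity, and specificity values are hypothetical.

```python
def posterior_probability(prior, sensitivity, specificity):
    """P(disease | positive test) via Bayes' theorem."""
    true_positive = sensitivity * prior                # P(positive and disease)
    false_positive = (1 - specificity) * (1 - prior)   # P(positive and no disease)
    return true_positive / (true_positive + false_positive)

p = posterior_probability(prior=0.01, sensitivity=0.90, specificity=0.95)
print(round(p, 3))  # 0.154
```

Even with a fairly accurate test, a positive result here implies only about a 15% chance of disease, because the disease is rare and false positives from the healthy majority dominate.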

Decision-Making and Forecasting

Inference plays a crucial role in decision-making and forecasting:

- Predictive Analytics: Using inference to predict future outcomes or trends based on historical data and patterns.

- Risk Assessment: Inferring the likelihood of a specific event occurring, such as the risk of a loan default or the probability of a natural disaster.

- Optimization: Making decisions that maximize a desired outcome, using inference to evaluate different options and select the best course of action.
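A risk estimate becomes a decision once it is combined with costs. In this hypothetical sketch, a logistic model with made-up weights estimates the probability of loan default, and a simple expected-profit rule chooses between approving and declining. It assumes the lender loses the full loan amount on default and earns `interest_rate` on repayment, which is a deliberate simplification.

```python
import math

# Hypothetical model parameters; features are [debt_to_income_ratio, missed_payments].
WEIGHTS = [3.0, 0.8]
BIAS = -4.0

def default_probability(features, weights=WEIGHTS, bias=BIAS):
    """Risk assessment: logistic model, P(default) = sigmoid(w . x + b)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def decide(p_default, loan_amount, interest_rate):
    """Optimization: pick the action with the higher expected profit.
    Assumes the whole loan is lost on default (no recovery)."""
    approve = (1 - p_default) * loan_amount * interest_rate - p_default * loan_amount
    decline = 0.0
    return "approve" if approve > decline else "decline"

for applicant in ([0.2, 0], [0.6, 3]):
    p = default_probability(applicant)
    print(applicant, round(p, 3), decide(p, loan_amount=10_000, interest_rate=0.10))
```

Under these assumptions the break-even default probability is r / (1 + r), so at a 10% interest rate any applicant whose estimated risk exceeds roughly 9% is declined.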

Challenges in Inference

Making accurate inferences is a fundamental challenge in machine learning. Two common pitfalls are overfitting and underfitting. Overfitting occurs when a model is too complex and fits the training data too closely, resulting in poor performance on new data. Underfitting, on the other hand, occurs when a model is too simple and fails to capture the underlying patterns in the data, also leading to poor performance.

Evaluating the accuracy of inferences is crucial. For models that predict numeric values (regression), common metrics include mean squared error (MSE), root mean squared error (RMSE), and mean absolute error (MAE). These metrics measure the difference between the predicted values and the true values, providing insights into the model’s predictive performance.

Overfitting

- Occurs when a model is too complex and fits the training data too closely.

- Results in poor performance on new data.

- Can be mitigated by techniques like regularization and early stopping.
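Early stopping can be sketched as a patience rule on validation loss. The function below is a hypothetical, simplified version that works on a precomputed list of per-epoch validation losses rather than a real training loop; in practice, frameworks also save the model weights from the best epoch so they can be restored.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the epoch whose model should be kept: the one with the lowest validation
    loss, stopping once the loss has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_loss, epochs_without_improvement = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, epochs_without_improvement = epoch, loss, 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss has stalled; further training likely overfits
    return best_epoch

# Hypothetical validation losses: improving at first, then rising as the model overfits.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.68]
print(early_stopping_epoch(losses))  # 3
```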

Underfitting

- Occurs when a model is too simple and fails to capture the underlying patterns in the data.

- Also leads to poor performance.

- Can be mitigated by increasing the model’s complexity or using more training data.

Evaluation Metrics

- Mean Squared Error (MSE): Measures the average of the squared differences between predicted and true values.

- Root Mean Squared Error (RMSE): Square root of MSE, expressed in the same units as the target variable. It equals the standard deviation of the errors only when the errors have zero mean.

- Mean Absolute Error (MAE): Measures the average of the absolute differences between predicted and true values.
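The three metrics above can be computed in a few lines of plain Python. The true and predicted values in this sketch are hypothetical.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return (MSE, RMSE, MAE) for paired lists of true and predicted values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / len(errors)    # average squared error
    rmse = math.sqrt(mse)                             # back in the target's units
    mae = sum(abs(e) for e in errors) / len(errors)   # average absolute error
    return mse, rmse, mae

mse, rmse, mae = regression_metrics([3.0, -0.5, 2.0, 7.0], [2.5, 0.0, 2.0, 8.0])
print(mse, mae)        # 0.375 0.5
print(round(rmse, 4))  # 0.6124
```

Because squaring magnifies large errors, the single miss of 1.0 contributes two thirds of the MSE here, while MAE weights all errors linearly.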

Future Directions in Inference

Inference in machine learning continues to evolve rapidly, driven by advancements in computing power and the availability of massive datasets. Emerging trends and advancements in inference techniques hold great promise for further enhancing the capabilities of machine learning models.

One of the most significant trends in inference is the increasing use of approximate inference methods. Approximate inference techniques, such as variational inference and Markov chain Monte Carlo (MCMC), allow researchers to perform inference on models for which exact inference is intractable, for example when the posterior distribution has no closed form or is too expensive to compute exactly.

These techniques have the potential to significantly expand the range of problems that can be solved using machine learning.
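To illustrate approximate inference, here is a minimal random-walk Metropolis sampler (one of the simplest MCMC methods) in plain Python. It targets a posterior whose exact answer is known, a coin's bias with a Beta(2, 2) prior after a hypothetical 7 heads and 3 tails, so the approximation can be checked against the exact posterior mean of 9 / 14. Variational inference is not shown. The step size, sample count, and burn-in are arbitrary illustrative choices.

```python
import math
import random

def log_posterior(theta, heads, tails, a=2.0, b=2.0):
    """Unnormalized log posterior for a coin's bias with a Beta(a, b) prior."""
    if not 0.0 < theta < 1.0:
        return -math.inf
    return (a - 1 + heads) * math.log(theta) + (b - 1 + tails) * math.log(1 - theta)

def metropolis(heads, tails, n_samples=20_000, burn_in=2_000, step=0.1, seed=0):
    """Random-walk Metropolis: only needs the posterior up to a normalizing constant."""
    rng = random.Random(seed)
    theta = 0.5
    current = log_posterior(theta, heads, tails)
    samples = []
    for i in range(n_samples + burn_in):
        proposal = theta + rng.gauss(0.0, step)  # symmetric Gaussian proposal
        candidate = log_posterior(proposal, heads, tails)
        # Accept with probability min(1, p(proposal) / p(theta)).
        if rng.random() < math.exp(min(0.0, candidate - current)):
            theta, current = proposal, candidate
        if i >= burn_in:
            samples.append(theta)
    return samples

samples = metropolis(heads=7, tails=3)
print(sum(samples) / len(samples))  # close to the exact value 9 / 14, about 0.643
```

The sampler never computes the normalizing constant of the posterior, which is exactly what makes MCMC useful when that constant is intractable.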

Another important trend in inference is the development of new algorithms for online and real-time inference. Online and real-time inference is becoming increasingly important as machine learning models are deployed in a wider range of applications, such as autonomous vehicles and medical diagnosis.

These applications require inference algorithms that can operate in real time and adapt to changing conditions.
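One simple way to picture inference that adapts to changing conditions is an online model that serves a prediction for each incoming example and then updates itself once the true outcome arrives. The sketch below is hypothetical: a one-feature linear model trained by stochastic gradient descent on a simulated stream whose underlying relationship changes halfway through.

```python
import random

class OnlineLinearModel:
    """Hypothetical streaming model y = w * x + b, updated with one SGD step per example."""

    def __init__(self, learning_rate=0.1):
        self.w, self.b, self.lr = 0.0, 0.0, learning_rate

    def predict(self, x):
        return self.w * x + self.b

    def update(self, x, y):
        error = self.predict(x) - y  # derivative of 0.5 * error**2 w.r.t. the prediction
        self.w -= self.lr * error * x
        self.b -= self.lr * error

def simulate(n_steps=10_000, drift_at=5_000, seed=42):
    rng = random.Random(seed)
    model, abs_errors = OnlineLinearModel(), []
    for t in range(n_steps):
        x = rng.random()
        # Hypothetical drift: the true relationship changes partway through the stream.
        y = 2 * x + 1 if t < drift_at else -x + 3
        abs_errors.append(abs(model.predict(x) - y))  # predict first (real-time inference)
        model.update(x, y)                            # then adapt once the outcome arrives
    return model, abs_errors

model, abs_errors = simulate()
print(round(model.w, 2), round(model.b, 2))  # close to -1.0 and 3.0 after the drift
```

Prediction errors spike right after the drift and then shrink as the model re-adapts; a batch model trained once on the first half of the stream would keep making the old mistake.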

Potential Applications of Advancements in Inference

The advancements in inference techniques have the potential to revolutionize a wide range of fields, including:

- Healthcare: Inference techniques can be used to develop more accurate and personalized medical diagnosis and treatment plans.

- Finance: Inference techniques can be used to develop more sophisticated financial models and risk assessment tools.

- Transportation: Inference techniques can be used to develop more efficient and safer transportation systems.

- Manufacturing: Inference techniques can be used to optimize manufacturing processes and improve product quality.

- Retail: Inference techniques can be used to develop more personalized and targeted marketing campaigns.

Frequently Asked Questions

What is the role of inference in machine learning?

Inference is the process of using trained machine learning models to make predictions on new, unseen data.

What are the different types of inference?

There are three main types of inference: inductive, deductive, and abductive.

How is inference used in decision-making?

Inference provides a basis for making informed decisions by predicting the likelihood of different outcomes based on available data.